Congress is considering a number of legislative packages to regulate AI. AI is a system that was launched globally with no safety standards, no threat modeling, and no real oversight. A system that externalized risk onto the public, created enormous security vulnerabilities, and then acted surprised when criminals, hostile states, and bad actors exploited it.

After the damage was done, the same companies that built it told governments not to regulate, because regulation would “stifle innovation.” Instead, they sold us cybersecurity products, compliance frameworks, and risk-management services to fix the problems they created.

Yes, artificial intelligence is a problem. Wait…Oh, no, sorry. That’s not AI.

That was the Internet. And it made the tech bros the richest ruling class in history.

And that’s why some of us are just a little skeptical when the same tech bros are now telling us: “Trust us, this time will be different.” AI will be different, that’s for sure. They’ll get even richer and they’ll rip us off even more this time. Not to mention building small nuclear reactors on government land that we paid for, monopolizing electrical grids that we paid for, and expecting us to fill the landscape with massive power lines that we’ll pay for.

The topper is that these libertines want no responsibility for anything, and they want to seize control of the levers of government to stop any accountability. But there are some in Congress who are serious about not getting fooled again.

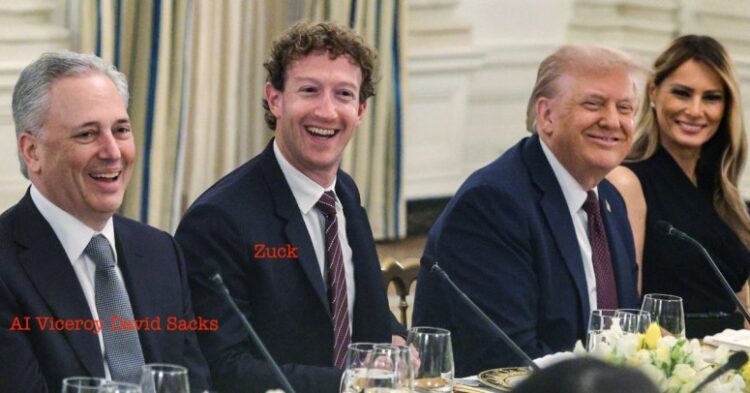

Senator Marsha Blackburn released a summary of legislation she is sponsoring that gives us some cause for hope (read it here courtesy of our friends at the Copyright Alliance). Because her bill might be effective, Silicon Valley shills will be all over it to try to water it down and, if at all possible, destroy it. That attack of the shills has already started with Silicon Valley’s AI Viceroy in the Trump White House, a guy you may never have heard of named David Sacks. Know that name. Beware that name.

Senator Blackburn’s bill will do a lot of good things, including protecting copyright. But the first substantive section of Senator Blackburn’s summary is a game changer. She would establish an obligation on AI platforms to be responsible for known or predictable harm that can befall users of AI products. This is sometimes called a “duty of care.”

Her summary states:

Place a duty of care on AI developers in the design, development, and operation of AI platforms to prevent and mitigate foreseeable harm to users. Additionally, this section requires:

• AI platforms to conduct regular risk assessments of how algorithmic systems, engagement mechanics, and data practices contribute to psychological, physical, financial, and exploitative harms.

• The Federal Trade Commission (FTC) to promulgate rules establishing minimum reasonable safeguards.

At its core, Senator Blackburn’s AI bill tries to force tech companies to play by rules that most other industries have followed for decades: if you design a product that predictably harms people, you have a responsibility to fix it.

That idea is called “products liability.” Simply put, it means companies can’t sell dangerous products and then shrug it off when people get hurt. Sounds logical, right? Sounds like what you’d expect would happen if you did the bad thing? Car makers have to worry about the famous exploding gas tanks. Toy manufacturers have to worry about choking hazards. Drug companies have to test for side effects. Tobacco companies…well, you know the rest. The law doesn’t demand perfection, but it does demand reasonable care and imposes a “duty of care” on companies that put dangerous products before the public.

Blackburn’s bill would apply that same logic to AI platforms. Yes, the special people have to follow the same rules as everyone else, with no safe harbors.

Instead of treating AI systems as abstract “speech” or neutral tools, the bill treats them as what they are: products with design choices. Those choices can foreseeably cause psychological harm, financial scams, physical danger, or exploitation. Recommendation algorithms, engagement mechanics, and data practices aren’t accidents. They’re engineered. At tremendous expense. One thing you can be sure of is that if Google’s algorithms behave a certain way, it’s not because the engineers ran out of development money. The same is true of ChatGPT, Grok, etc. On a certain level of reality, this is very likely not a matter of guesswork or predictability. It’s “known” rather than “should have known.” These people know exactly what their algorithms do. And they do it for the money.

The bill would impose that duty of care on AI developers and platform operators. A duty of care is a basic legal obligation to act reasonably to prevent foreseeable harm. “Foreseeable” doesn’t mean you can predict the exact victim or moment; it means you can anticipate the type of harm that flows to the users you target from how the system is built.

To make that duty real, the bill would require companies to conduct regular risk assessments and make them public. These aren’t PR exercises. They must evaluate how their algorithms, engagement loops, and data use contribute to harms like addiction, manipulation, fraud, harassment, and exploitation.

They do this already, believe it. What’s different is that they don’t make it public, any more than Ford made public the internal research that the Pinto’s gas tank was likely to explode. In other words, platforms must look honestly at what their systems actually do in the world, not just what they claim to do.

The bill also directs the Federal Trade Commission (FTC) to write rules establishing minimum reasonable safeguards. That’s important because it turns a vague obligation (“be responsible”) into enforceable standards (“here’s what you must do at a minimum”). Think of it as seatbelts and crash tests for AI systems.

So why do tech companies object? Because many of them argue that their algorithms are protected by the First Amendment, that regulating how recommendations work is regulating speech. Yes, that is a load of crap. It’s not just you, it really is BS.

Imagine Ford arguing that an exploding gas tank was “expressive conduct,” that drivers chose the Pinto to make a statement, and that safety regulation would therefore violate Ford’s free speech rights. No court would take that seriously. A gas tank isn’t an opinion. It’s an engineered component with known risks, risks that were known to the manufacturer.

AI platforms are the same. When harm flows from design decisions (how content is ranked, how users are nudged, how systems optimize for engagement), that’s not speech. That’s product design. You can measure it, test it, and audit it, which they do, and make it safer, which they don’t.

This part of Senator Blackburn’s bill matters because platform design shapes culture, careers, and livelihoods. Algorithms decide what gets seen, what gets buried, and what gets exploited. Blackburn’s bill doesn’t solve every problem, but it takes an important step: it says tech companies can’t hide dangerous products behind free-speech rhetoric anymore.

If you build it, and it predictably hurts people, you’re responsible for fixing it. That’s not censorship. It’s accountability. And people like Marc Andreessen, Sam Altman, Elon Musk and David Sacks will hate it.

[This post first appeared on The Trichordist]